Blog

Okteto vs mirrord: Choosing the Right Development Environment Solution for Your Team

When evaluating Kubernetes development solutions, platform teams face a critical choice: invest in a tool that solves one problem well, or a platform that...

The Three Pillars of Trust for AI in Software Development

AI adoption is up but trust is down. Three pillars platform teams need to enable confident AI development....

Run AI Agents at Scale | Introducing Okteto AI Agent Fleets

AI agents need more than a terminal. Okteto gives them environments to execute, validate, and deliver value instantly...

Why Every Development Environment Eventually Falls Short. And Why That’s Okay.

Even the best development environments hit a wall at some point. What you do next is the important part...

Why Flexibility Is Key to Scaling Development Environments

Learn how to evolve your environment strategy as your team and architecture grow....

Shared Environments Could Be Costing Your Company Millions

Testing code shouldn’t feel like booking a conference room. And yet, in many engineering teams, that’s exactly what it’s like. Modern software development...

The Ultimate Platform Stack: GitLab CI/CD + Okteto Development Environments

In every software organization, platform teams sit at the intersection of innovation and scale. Your mission is to enable engineers to move faster without...

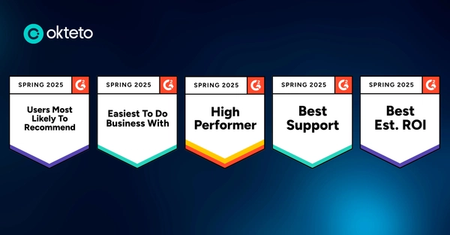

Okteto Development Environments Named High Performer By G2

We’re thrilled to share that Okteto has earned 7 badges in G2’s Spring 2025 Reports, highlighting our leadership across DevOps and Container Orchestration...

What Are Ephemeral Environments and Why Do Platform Teams Need Them?

In this post, learn everything you need to know about ephemeral environments: what they are, how they work, and their benefits. ...

Platform Tooling Landscape | 21 Best Platform Engineering Tools in 2025

Today, having the right platform engineering tools in place isn’t just a “nice-to-have”—it’s a necessity. But navigating the platform tooling landscape is just the first step....

Transforming Developer Productivity For Our Customers

At Okteto, our core focus this past year was on building features that directly solve critical challenges faced by platform engineering teams....

Announcing Okteto CLI 3.0

We are happy to announce the release of Okteto CLI 3.0! This release marks a significant milestone, bringing together the most impactful features we’ve...

Enhance CI Pipelines with Dagger and Okteto Preview Environments for a Better Developer Experience

Continuous Integration (CI) is a cornerstone of modern software development, ensuring that code changes are automatically tested and merged, reducing the...

Introducing Okteto Insights: Essential Metrics For Platform Teams

Platform Teams can’t succeed in improving the DevX unless they have clear metrics that help them understand the outcome of their initiatives. To help platform...

Benefits of Platform Engineering

In a world where technology advances at lightning speed, it is imperative for organizations to equip themselves with the best practices in the software...

Platform Engineering Roadmap: Best Practices You Need To Know

In our previous blog post, we explored how cultivating a customer-centric mindset can help you become a better platform engineer. Now, let's delve into...

Platform Engineering Versus Devops

Platform engineering has become an increasingly popular topic in the software development industry. But a lot of people are still confused on what's the...

Empowering Teams: The Shift in Okteto's Development Focus

In the early stages of innovation, Okteto introduced a free SaaS tier, Okteto Cloud, to provide individual developers with a platform for remote development...

Automating Development Environments and Infrastructure with Terraform and Okteto

If you're developing a modern cloud-native app, it's very likely that your app is leveraging some cloud resources. These could be things like storage buckets,...

Improving Development Velocity by Building a Better DevX

Before automating environments for the ultimate modern development experience, platform providers (builders) need a vision and game plan. This guide...

How To Speed Up Container Image Builds

Okteto comes with a build service that enables developers to offload image building steps to the cloud. This eliminates the need for Docker to be running...

Simplifying Launching Development Environments With Okteto Catalog

Platform engineering is all about creating "golden paths" for developers. These golden paths are streamlined processes that allow developers to focus on...

Automate Provisioning Any Dev Resource on Any Cloud Provider With Pulumi and Okteto

The Value: It is common in today's landscape to build microservices-based applications that leverage resources like RDS databases, storage buckets, etc...

How Developers Can Seamlessly Collaborate When Building Microservice Apps

Building microservices based applications is inherently challenging. Given the multitude of components involved, it is unrealistic to expect any individual...

Slash Your Kubernetes Costs During Development

Using Kubernetes can be quite costly, to be frank. Regardless of the cloud provider you choose for your cluster, there are expenses associated with the...

Making Your Helm-Packaged Applications Ready for Cloud Native Development with Okteto

Helm, often referred to as the "package manager for Kubernetes," is a powerful tool that streamlines the installation and management of cloud native applications...

Five Challenges with Developing Locally Using Docker Compose

After the popularization of containers, a lot of the development workflow started leaning on Docker Compose. Developers would have a Docker Compose file...

The Way You're Using Kubernetes Clusters During Development Is Killing Productivity!

Transitioning from development to production is always challenging. There's that underlying fear of overlooking something crucial, such as misconfigurations...

Okteto Achieves SOC 2 Type 1 Compliance

We’re excited to announce that Okteto has successfully passed a third-party audit and received a SOC 2® Type I report. This achievement is more than just...

Using ArgoCD With Okteto for a Unified Kubernetes Development Experience

ArgoCD is a powerful tool for continuous deployment that leverages Git repositories as the ultimate source of truth for managing Kubernetes deployments....

Ashlynn Pericacho

Ramiro Berrelleza

Joey Davidson

Pablo Chico de Guzman

Arsh Sharma

David Butler